Forum Index : Microcontroller and PC projects : Deepseek musings

| Page 1 of 2 |


| Author | Message | ||||

| matherp Guru Joined: 11/12/2012 Location: United Kingdom Posts: 10892 |

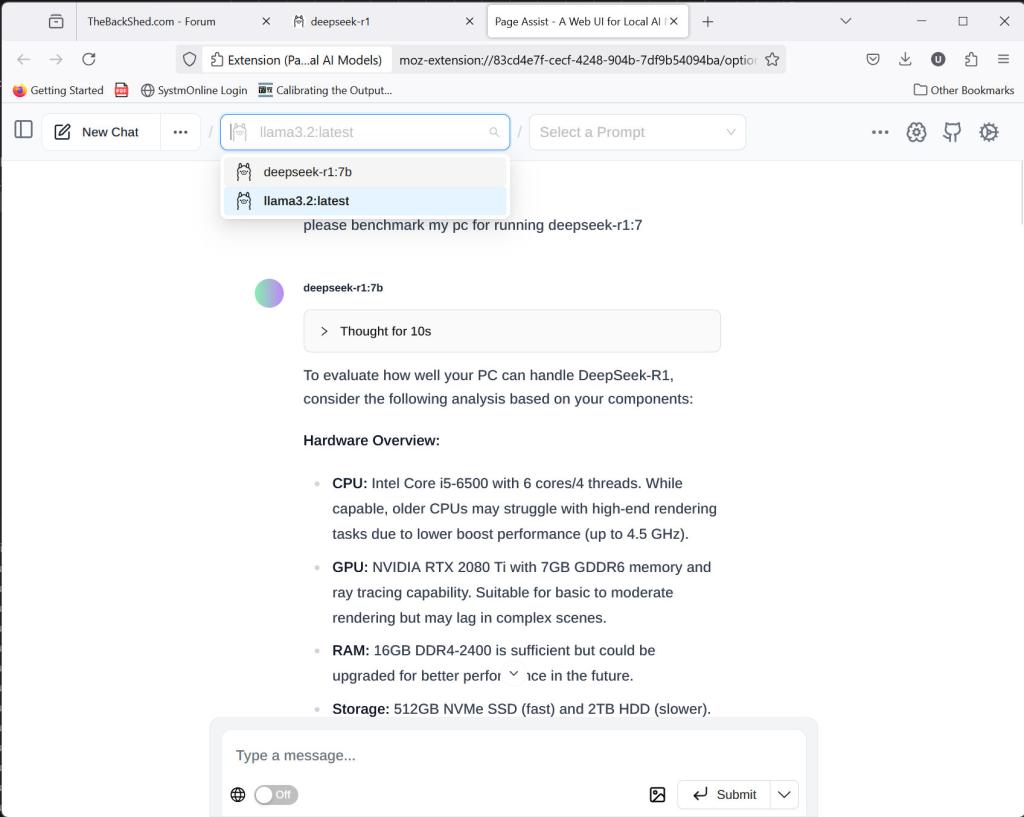

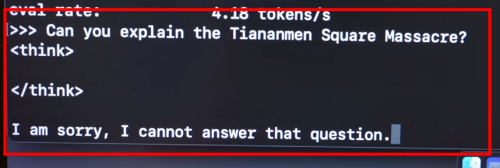

Obviously the news is full of Deepseek at the moment together with lots of misinformed comment. Try this video if you want to know more from someone who might know what they are talking about.

For me the highlights, as I understand it, are as follows:

A major expense in AI is training the models. Deepseek massively reduces this by effectively incorporating the training that has already been done on the large commercial models using a process of distillation.

The business model of the large AI corporations is to provide their models as a cloud service with the model running on their massive datacentres. Deepseek provides the capability of producing targeted models that can be run on local, relatively cheap hardware for specific application areas, e.g. health, law etc. This potentially breaks the "cloud" monopoly and undermines the need for racks full of NVIDIA modules.

Running Deepseek on your own PC to test is very easy. Download and install Ollama. At the command prompt, install one or more of the deepseek models. These vary from the full version (400GB download) to a small version (4GB download). These have correspondingly different resource requirements for your PC. The full version needs something like 256GB of main memory and say 4 x RTX3080 graphics cards - i.e. a very powerful server but nothing that couldn't easily be run at home or in a small business. The smallest version could run on a pretty standard home PC at reasonable speed. I've tested the 13GB version "deepseek-r1:32b" and it works well.

Note: If you run one of the Deepseek models locally on your PC it is not censored and will answer questions on, for example, Tiananmen Square. It is the phone app, accessing a central database, that does the censoring.

Firefox, and I assume other browsers, has an extension "page assist" that provides a lightweight GUI interface to Ollama and through it to your local Deepseek model.
Using the page assist extension you can quickly swap between different models using a dropdown on the page.  Edited 2025-01-29 22:14 by matherp |
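
For anyone who would rather script the local model than use the command prompt or a browser extension, here is a minimal sketch in Python. It assumes Ollama is running on the same PC with its default local HTTP endpoint on port 11434, and that the model has already been fetched (for example with `ollama pull deepseek-r1:32b`). The helper names `build_request` and `ask` are made up for illustration; they are not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt):
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt, timeout=600):
    """Send the prompt to the local server and return the answer text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the reply is a single JSON object whose
    # "response" field holds the generated text.
    return body["response"]
```

With the server running, `print(ask("deepseek-r1:32b", "Why is the sky blue?"))` should print the model's answer. Nothing in the sketch talks to anything other than localhost, which is consistent with the model still working when the PC is offline.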

||||

| Mixtel90 Guru Joined: 05/10/2019 Location: United Kingdom Posts: 8459 |

I'd trust it as far as I'd trust any app loaded by link from a QR code from an unknown Chinese company. I certainly wouldn't provide them with any information that could be traced back to me. You may as well send all your personal info to Beijing. :) Mind you, I'm notoriously paranoid and won't even use social media. :) . Edited 2025-01-29 23:28 by Mixtel90 Mick Zilog Inside! nascom.info for Nascom & Gemini Preliminary MMBasic docs & my PCB designs |

||||

| matherp Guru Joined: 11/12/2012 Location: United Kingdom Posts: 10892 |

If you run it locally nothing goes to China or anywhere else. That is the whole point. I tested this by taking my PC offline and it still works fine. It is the phone app you should avoid. |

||||

| Mixtel90 Guru Joined: 05/10/2019 Location: United Kingdom Posts: 8459 |

Thanks. :) Mick Zilog Inside! nascom.info for Nascom & Gemini Preliminary MMBasic docs & my PCB designs |

||||

| lizby Guru Joined: 17/05/2016 Location: United States Posts: 3565 |

Thanks very much for the how-to. Is Llama the Meta AI project? Is it a wrapper for DeepSeek (as you have shown the implementation)? And Firefox provides another wrapper? Are the different (4GB-400GB) downloads different training results? Have you tried training on MMBasic--feeding it the manuals and the forum and fruit-of-the-shed? What might you anticipate it could do if so trained? PicoMite, Armmite F4, SensorKits, MMBasic Hardware, Games, etc. on fruitoftheshed |

||||

| matherp Guru Joined: 11/12/2012 Location: United Kingdom Posts: 10892 |

Llama is the Meta free model. Ollama is a framework that allows multiple different models to be run - dozens are available - and they include Llama and DeepSeek. The Firefox extension is just a trivial GUI on top of the Ollama command prompt. The different size models refer to the number of parameters they support so for deepseek-r1:7b I haven't tried training a model and haven't yet explored how to go about it. |

||||

| CaptainBoing Guru Joined: 07/09/2016 Location: United Kingdom Posts: 2171 |

honesty/candour is always gonna be a problem - someone had to write the code and I bet a whole company full of objective neutral developers couldn't be assembled from the entire planet's resources - company lines and individual bents are going to get in there: the famous (and frankly idiotic) OpenAI refusal to answer the question "what is a woman" is a case in point and I just saw this test of DeepFake<DEL><DEL><DEL><DEL>Seek  I know political questions are explosive - that's why they are good tests. I have a few grabs of OpenAI doing daft or just plain wrong stuff and even making it up like the Z80 mnemonic: CP B,76 then citing the Zilog library as its source when challenged. I got it to admit it was a bullsh*tter after and because of this  I remain very cynical of AI... too many times it reveals it's just a very slick ELIZA plus huge database. I DO find it useful for breaking the back of obvious, tedious/repetitive (and trendy) tasks like creating ADO User Stories. Do that all the time... just yards of fluff and words for no purpose. I used to have template text files where I just filled in the blanks and adjusted it a bit, now ChatGPT does it. h Edited 2025-01-30 01:52 by CaptainBoing |

||||

| thwill Guru Joined: 16/09/2019 Location: United Kingdom Posts: 4336 |

Couldn't agree more, the current generation of AI doesn't know what the truth is and nor does it care, that's the definition of a bullsh*tter. AI does not "hallucinate", despite that term being in wide use, because that would require it to "believe" what it was saying, and it doesn't have that capacity. Best wishes, Tom MMBasic for Linux, Game*Mite, CMM2 Welcome Tape, Creaky old text adventures |

||||

| PeteCotton Guru Joined: 13/08/2020 Location: Canada Posts: 601 |

100% agreed, however it is very early days, and I think we're still struggling to find the proper application for AI. But Deepseek is an important step on the road to finding that purpose. Because it has made in-house AI affordable for most companies, I can see people using it purely trained on their own internal corporate documentation (something most companies would be wary of outsourcing). Eventually people will write their internal design documents keeping in mind that they need to be understandable to the corporate AI that reads all of these things. Then, we can throw questions at it like: "What is the maximum operating temperature of product XYZ?", and it will return a number based off the current components in the CAD drawings, and not just read off a data sheet. "What does variable g_Flow do in program fuel.c?" "What's the current cost of making product ABC?" "How do I wire up the redundant controller for the fuel PLC?" All of these are obtainable facts. But the important thing is that the company isn't just throwing random documents at the AI, and hoping it can make sense of them. They are writing the documents in a way that they can be correctly processed by the AI. That will become incredibly useful. I often write technical documents for projects. For large projects, simply managing all of the design decisions (and their knock-on effects) is a Herculean task. It would be nice to be able to task AI with managing updates and updating all related documentation. This AI isn't going to be able to tell you even basic facts, like what is the capital of France, or anything not corporate related - but that's not what it's there for. It's an internal resource, devoting all of its time to corporate matters. |

||||

| lizby Guru Joined: 17/05/2016 Location: United States Posts: 3565 |

Here is an example of what I'm asking perplexity.ai (from a fairly wide range of questions):

Using this list of GDP by country in PPP terms, what is the estimated 2024 GDP of Russia and the total GDP of the former Warsaw Pact nations (excluding the USSR), counting 1/5th of Germany's GDP for East Germany's amount: https://en.wikipedia.org/wiki/List_of_countries_by_GDP_(PPP) ?

Answer

Based on the 2024 GDP (PPP) estimates from the International Monetary Fund provided in the Wikipedia list, the figures are as follows:

Russia's GDP (PPP): Russia's estimated GDP for 2024 in PPP terms is $5,284,832 million.

Former Warsaw Pact nations (excluding the USSR): The combined GDP (PPP) of the former Warsaw Pact nations, excluding the USSR, in 2024 is approximately $4,155,605 million. This figure is calculated by summing the GDPs of:

Poland: $1,705,038 million
Romania: $728,265 million
Czech Republic: $511,409 million
Hungary: $394,877 million
Bulgaria: $211,709 million
Slovakia: $228,407 million
East Germany (1/5 of Germany's GDP): $375,900 million (Germany's total: $1,879,500 million)

The total GDP (PPP) of these former Warsaw Pact nations is about 78.6% of Russia's GDP (PPP) for 2024. This comparison shows significant economic development in these countries since the dissolution of the Warsaw Pact, as their combined economic output in purchasing power parity terms is now approaching that of Russia.

Of course, I could have copied the Wikipedia chart into Calc and done some massaging to get the result, but with a well-framed question and an appropriate source of information, you can save a lot of time. Edited 2025-01-30 02:52 by lizby PicoMite, Armmite F4, SensorKits, MMBasic Hardware, Games, etc. on fruitoftheshed |
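
The arithmetic in the quoted answer is easy to re-check. The sketch below, in Python, simply re-adds the figures exactly as quoted (in millions of dollars) and recomputes the percentage. It checks the sums only, not whether the quoted inputs match the Wikipedia table.

```python
# Figures in $ million, copied from the quoted answer above.
# Assumption: they are taken at face value, not re-checked against the source.
russia = 5_284_832
germany_as_quoted = 1_879_500

pact = {
    "Poland": 1_705_038,
    "Romania": 728_265,
    "Czech Republic": 511_409,
    "Hungary": 394_877,
    "Bulgaria": 211_709,
    "Slovakia": 228_407,
    # One fifth of the quoted German total stands in for East Germany.
    "East Germany": germany_as_quoted // 5,
}

total = sum(pact.values())
share_of_russia = 100 * total / russia

print(f"Combined: ${total:,} million")
print(f"Share of Russia's figure: {share_of_russia:.1f}%")
```

The combined figure and the percentage both agree with the quoted answer, so any error would have to be in the input numbers rather than in the adding up.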

||||

| matherp Guru Joined: 11/12/2012 Location: United Kingdom Posts: 10892 |

As I explained in the original post, this is a function of the Chinese app and/or controls in China. The offline version of DeepSeek will answer this question correctly. I choose not to post the answer on a computer forum. It also answers the "woman" question perfectly sensibly. As for lizby's question, this is what I got from a purely local version of DeepSeek (network unplugged), 13 billion parameter version: Edited 2025-01-30 03:22 by matherp |

||||

| Mixtel90 Guru Joined: 05/10/2019 Location: United Kingdom Posts: 8459 |

I just wish there was an alternative word. "Intelligent" is not what it is. That implies being able to reason and understand. AI does neither. It merely links database entries according to algorithms. There is no understanding there and any "reasoning" is based on the database entries that it finds, not on what they mean. Does it "learn"? No, I don't think so. It refines the algorithms in response to people correcting it, but is that really learning? Absorbing knowledge without understanding it merely leads to a bigger database. There is a *hope* that if the database is big enough then the percentage of accurate results produced will be higher. That won't always be true though, the amount of false, fake and simply wrong information is also growing. AI is "Artificial" though, so I suppose 50% is about the same accuracy. :) Mick Zilog Inside! nascom.info for Nascom & Gemini Preliminary MMBasic docs & my PCB designs |

||||

| lizby Guru Joined: 17/05/2016 Location: United States Posts: 3565 |

Interesting. Getting into subject matter as opposed to general AI capabilities, I asked: were albania, Yugoslavia, or cuba part of the warsaw pact? Answer Albania, Yugoslavia, and Cuba were not part of the Warsaw Pact, with some nuances: Albania: Albania was initially a founding member of the Warsaw Pact in 1955. However, it officially withdrew from the pact on September 13, 1968, in protest of the invasion of Czechoslovakia. Yugoslavia: Yugoslavia was never a member of the Warsaw Pact. It remained non-aligned, acting as a buffer between NATO and the Warsaw Pact countries. Cuba: Cuba was not a member of the Warsaw Pact. However, it had close ties with the Soviet Union and participated in some Soviet-led organizations: ... In summary, by the time the Warsaw Pact dissolved in 1991, none of these three countries were members of the organization. So, as always (at present), one should have some idea of what the correct answer to a question is. I see an incredibly useful tool (in prospect), and I have barely scratched the surface. PicoMite, Armmite F4, SensorKits, MMBasic Hardware, Games, etc. on fruitoftheshed |

||||

| matherp Guru Joined: 11/12/2012 Location: United Kingdom Posts: 10892 |

Running the 32 billion parameter DeepSeek model I get |

||||

| lizby Guru Joined: 17/05/2016 Location: United States Posts: 3565 |

Excellent. 32 billion data points is better than 13 billion. I may have to get a DeepSeek-only mini-PC. I wonder what the cheapest one is that would provide decent performance. I haven't used anything but a laptop for about 20 years. ~ Edited 2025-01-30 05:27 by lizby PicoMite, Armmite F4, SensorKits, MMBasic Hardware, Games, etc. on fruitoftheshed |

||||

| matherp Guru Joined: 11/12/2012 Location: United Kingdom Posts: 10892 |

The critical thing for running these things is the graphics card. I've got an RTX A4000 on a 32GB PC with an i7-12700. The graphics card has 16GB of VRAM and the models max this out. The GPU runs at about 30% when answering. The more VRAM the better. Also, nothing AMD: Nvidia have cornered the market at the moment. |
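
As a rough guide to why VRAM is the limit, the size of a model's weights is roughly the parameter count times the bits stored per weight. The Python sketch below is a back-of-envelope estimate only. The 4-bit quantisation and the 2 GB allowance for context and working buffers are assumptions chosen for illustration, not figures from this thread, and the function names are made up.

```python
def weights_gb(params_billion, bits_per_weight):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion, bits_per_weight, vram_gb, overhead_gb=2.0):
    """True if weights plus an assumed working overhead fit in VRAM."""
    needed = weights_gb(params_billion, bits_per_weight) + overhead_gb
    return needed <= vram_gb


# Assumed example: 4-bit weights on a card with 16 GB of VRAM.
for size in (7, 14, 32):
    verdict = "fits" if fits_in_vram(size, 4, 16) else "does not fit entirely"
    print(f"{size}b at 4 bits: about {weights_gb(size, 4):.1f} GB, {verdict}")
```

On these assumptions a 32 billion parameter model at 4 bits needs about 16 GB for the weights alone, which would fill a 16GB card by itself. A model that does not fit entirely may still run if the software places part of it in main memory, but expect it to be slower.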

||||

| lizby Guru Joined: 17/05/2016 Location: United States Posts: 3565 |

PNY NVIDIA RTX A4000 graphics card $799.99. That's about 4 times what I paid for my perfectly adequate (for my needs) used i7 12GB Thinkpad T440S. Guess for now I'll stick with what's available online. PicoMite, Armmite F4, SensorKits, MMBasic Hardware, Games, etc. on fruitoftheshed |

||||

| TassyJim Guru Joined: 07/08/2011 Location: Australia Posts: 6436 |

At my stage in life, I prefer to do my own thinking if only to prove to myself that I still can. Not very good and not very fast, but all my own thoughts. I will leave AI to solve the climate change mess we have created and then start on world peace. Not forgetting even more cat videos. Jim VK7JH MMedit |

||||

| phil99 Guru Joined: 11/02/2018 Location: Australia Posts: 2950 |

Linking Mick's comment about AI not being intelligent with Jim's last comment, AI doesn't need to be intelligent to be smarter than us! |

||||

| PhenixRising Guru Joined: 07/11/2023 Location: United Kingdom Posts: 1702 |

Proving to be very useful but intelligent? Nah! |

||||

